🚀Deploy a Custom Model in Tofu5

This function is based on the Tofu5 VMware image.

The recommended VMware version is VMware® Workstation 16 Pro or later.

We will provide video tutorials in the resource package to help you get started quickly.

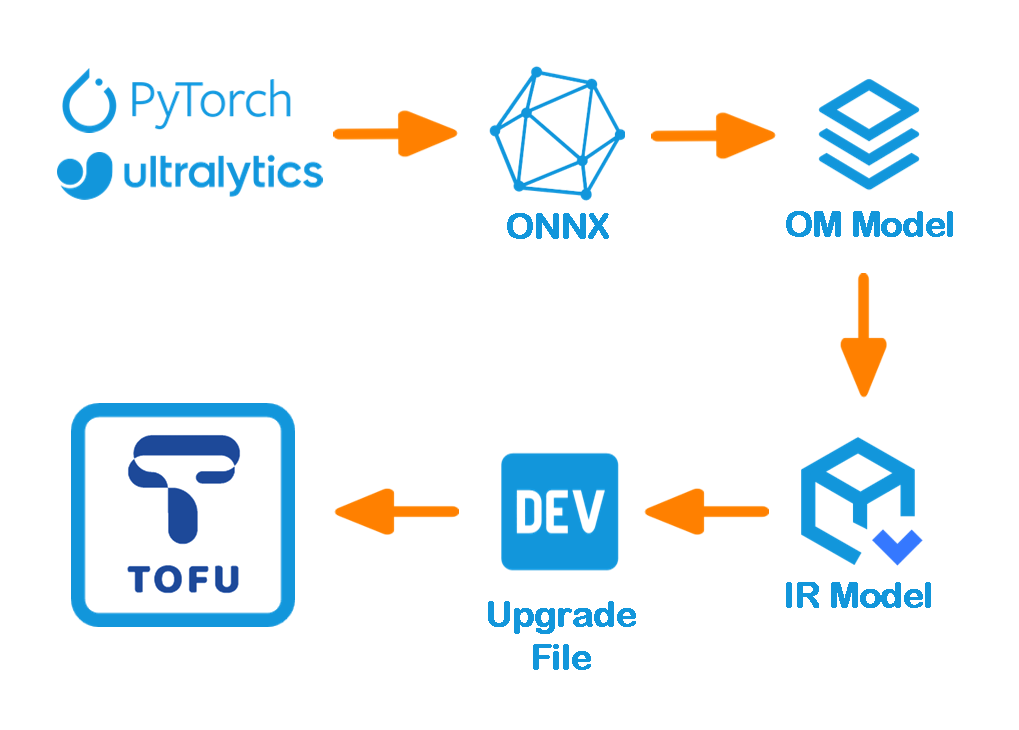

1. Model Quantization

The model quantization method is exactly the same as that used by Ultralytics. We have modified the original code of the model export process to adapt it for model inference with the Tofu5 module.

The model input resolution is 3x640x640, the model type is yolov8s, and detection models with up to 4 classes are supported.

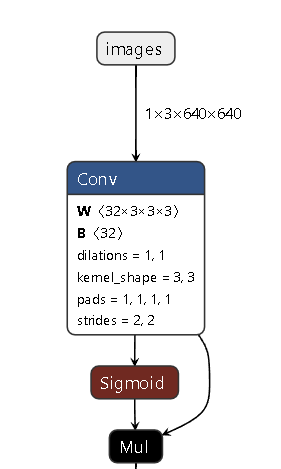

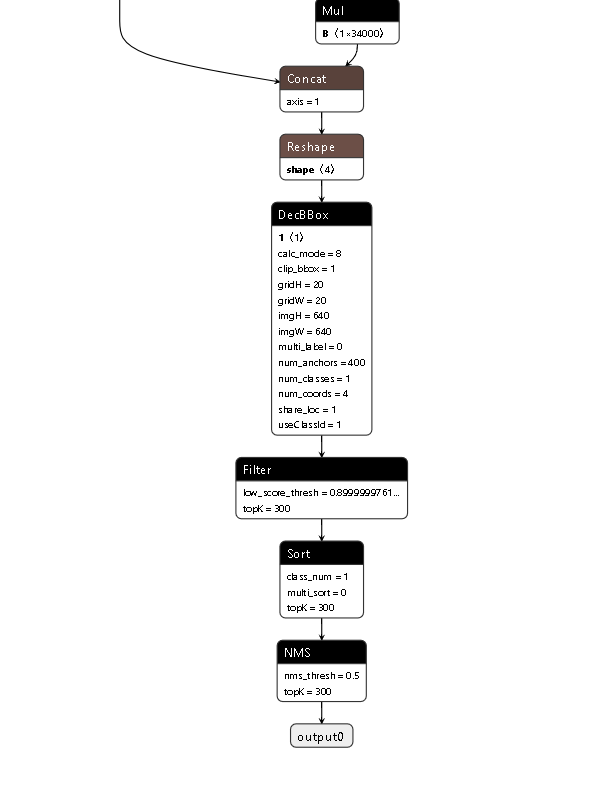

Check your model in Netron to ensure it ends with a detect layer.

You can modify the /home/littro/OnnxModel_export/export_nnie.py file to export your own .pt model to an .onnx model:

from ultralytics import YOLO

model = YOLO("./model/tank.pt")

success = model.export(format="onnx", opset=13)

After model quantization, you can obtain the ONNX file in the /home/littro/OnnxModel_export/model folder. Please use Netron to view and check the ONNX network.

We provide the Netron software in the /home/littro/Netron folder.

Enter the following command to run Netron, then open the model file for browsing.

/home/littro/Netron/Netron-***.AppImage

# Replace *** with the actual version number of the AppImage file

The correct network should end as shown in the figure below.
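Besides the visual check in Netron, the input shape can also be checked with a short script. The following is a minimal sketch and not part of the provided tooling: it assumes the third-party onnx Python package is installed, and the names read_input_dims and input_shape_problems are hypothetical. The expected shape 1x3x640x640 is taken from the --input_shape value of the export command in section 2.3.

```python
from typing import List, Sequence

# Input layout used in this guide: batch 1, 3 channels, 640x640 pixels.
EXPECTED_INPUT = (1, 3, 640, 640)


def read_input_dims(onnx_path: str) -> List[int]:
    """Return the dimensions of the first graph input of an ONNX file."""
    # Third-party package (assumed to be installed); imported lazily so the
    # comparison helper below can be used without it.
    import onnx

    model = onnx.load(onnx_path)
    dims = model.graph.input[0].type.tensor_type.shape.dim
    # dim_value is 0 when a dimension is symbolic (dynamic).
    return [d.dim_value for d in dims]


def input_shape_problems(dims: Sequence[int],
                         expected: Sequence[int] = EXPECTED_INPUT) -> List[str]:
    """Describe every mismatch between dims and the expected input shape."""
    if len(dims) != len(expected):
        return [f"expected {len(expected)} dimensions, got {len(dims)}"]
    return [
        f"dimension {i}: expected {want}, got {got}"
        for i, (got, want) in enumerate(zip(dims, expected))
        if got != want
    ]
```

For example, input_shape_problems(read_input_dims("/home/littro/OnnxModel_export/model/tank.onnx")) returns an empty list when the shape matches. This check only covers the input; whether the network ends with a detect layer still has to be confirmed in Netron.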

2. Deploy the Custom Model

2.1 Environment Check

Use the administrator account and run the following commands in sequence to check the ATC environment.

sudo su

# Enter your password when prompted

atc --version

If the information below is printed, the environment is normal.

Mapper Version ***

2.2 Prepare Files

- An .onnx file (converted from a .pt model). We have provided a reference .onnx file located in /home/littro/Model/yolov8/.

- Reference images and a .txt image list file. We have provided reference images and a .txt file located in /home/littro/Model/coco/.

- A folder for storing the exported model (create it in advance if it does not exist).
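The three prerequisites above can be verified before starting the export. The sketch below is an illustration only, using the Python standard library; the function name prepare_export_inputs is hypothetical and not part of the Tofu5 image. It reports missing files and creates the output folder if it does not exist.

```python
from pathlib import Path
from typing import List


def prepare_export_inputs(onnx_file: str, image_list: str, output_dir: str) -> List[str]:
    """Create the output folder and return the problems found (empty if none)."""
    problems = []
    if not Path(onnx_file).is_file():
        problems.append(f"missing ONNX model: {onnx_file}")

    list_path = Path(image_list)
    if not list_path.is_file():
        problems.append(f"missing image list: {image_list}")
    else:
        # Every non-empty line of the list must point to an existing image.
        for line in list_path.read_text().splitlines():
            entry = line.strip()
            if entry and not Path(entry).is_file():
                problems.append(f"missing reference image: {entry}")

    # Create the folder for the exported model in advance.
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    return problems
```

With the reference files, a call could look like prepare_export_inputs("/home/littro/Model/yolov8/tank.onnx", "/home/littro/Model/coco/imagelist_20240611134217.txt", "/home/littro/Model/yolov8/om_model").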

2.3 Export Model

Use the administrator account and run the following commands to switch to administrator mode.

sudo su

# Enter your password when prompted

Modify the reference command below to match your files, then run it.

🟢 Reference command:

atc --dump_data=0 --input_shape="images:1,3,640,640" \
--input_type="images:UINT8" --log_level=0 --online_model_type=0 --batch_num=1 \
--input_format=NCHW --output="/home/littro/Model/yolov8/om_model/tank" \
--soc_version=Hi3516DV500 \
--insert_op_conf=/root/modelzoo/tank/Hi3516DV500/insert_op.cfg \
--framework=5 --compile_mode=0 --save_original_model=false \
--model="/home/littro/Model/yolov8/tank.onnx" \
--image_list="images:/home/littro/Model/coco/imagelist_20240611134217.txt"

Parameters that need to be modified manually:

🟢 output: The output path.

The reference value in the command is:

--output="/home/littro/Model/yolov8/om_model/tank"

The output .om model will be named tank.om and located in the /home/littro/Model/yolov8/om_model/ folder.

🟢 model: The path and name of the ONNX model.

The reference value in the command is:

--model="/home/littro/Model/yolov8/tank.onnx"

🟢 image_list: Path to the .txt file containing the list of reference images for model generation. The list must include 20 to 50 images from the training dataset (ensure the images are consistent with the model's training data distribution to avoid quantization errors).

The reference value in the command is:

--image_list="images:/home/littro/Model/coco/imagelist_20240611134217.txt"

The image list is saved as a .txt file, with the following format:

/home/littro/Model/coco/000000000036.jpg

/home/littro/Model/coco/000000000049.jpg

/home/littro/Model/coco/000000000061.jpg

/home/littro/Model/coco/000000000074.jpg

/home/littro/Model/coco/000000000077.jpg

/home/littro/Model/coco/000000000086.jpg

/home/littro/Model/coco/000000000109.jpg

/home/littro/Model/coco/000000000110.jpg

We provide the reference images and the .txt file in /home/littro/Model/coco/.
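An image list in this format can be generated from a folder of training images instead of being written by hand. The following is a minimal sketch using only the Python standard library; the function name write_image_list, the default of 30 images, and the restriction to .jpg files are assumptions made for illustration.

```python
import random
from pathlib import Path
from typing import List


def write_image_list(image_dir: str, list_file: str,
                     count: int = 30, seed: int = 0) -> List[str]:
    """Write `count` absolute .jpg paths from image_dir to list_file, one per line."""
    if not 20 <= count <= 50:
        raise ValueError("the list must include 20 to 50 images")
    images = sorted(str(p.resolve()) for p in Path(image_dir).glob("*.jpg"))
    if len(images) < count:
        raise ValueError(f"only {len(images)} .jpg files found in {image_dir}")
    # A fixed seed keeps the selection reproducible between runs.
    chosen = sorted(random.Random(seed).sample(images, count))
    Path(list_file).write_text("\n".join(chosen) + "\n")
    return chosen
```

Random sampling is used here so that the selected images are spread over the dataset rather than taken from its first few files; whether that is representative enough depends on how the training data is organized.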

The model export process will take several minutes.

Once the export is successful, the resulting file will be in the .om format.
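Because only the output, model, and image_list values change between models, the reference command can be assembled from those three paths. The sketch below copies every other option verbatim from the reference command; the function name build_atc_command is hypothetical, and the insert_op_conf default is simply the path shown in the reference command.

```python
import shlex
from typing import List


def build_atc_command(
    model: str,
    output: str,
    image_list: str,
    insert_op_conf: str = "/root/modelzoo/tank/Hi3516DV500/insert_op.cfg",
) -> List[str]:
    """Return the reference atc command as an argument list with the paths filled in."""
    return [
        "atc",
        "--dump_data=0",
        "--input_shape=images:1,3,640,640",
        "--input_type=images:UINT8",
        "--log_level=0",
        "--online_model_type=0",
        "--batch_num=1",
        "--input_format=NCHW",
        f"--output={output}",
        "--soc_version=Hi3516DV500",
        f"--insert_op_conf={insert_op_conf}",
        "--framework=5",
        "--compile_mode=0",
        "--save_original_model=false",
        f"--model={model}",
        f"--image_list=images:{image_list}",
    ]


def command_string(command: List[str]) -> str:
    """Render the argument list as a shell command line for review."""
    return shlex.join(command)
```

The returned list can be printed with command_string for review, or passed to subprocess.run(command, check=True) from a session that already has administrator rights.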

2.4 Model Packaging

Run the packaging script.

First, switch to the script directory (path: /home/littro/Model/yolov8/), then run the packaging script:

# Switch to the script directory

cd /home/littro/Model/yolov8/

# Run the packaging script

./encryptmodel tank.om model_custom.ir

After the script finishes, you will have the packaged model file model_custom.ir.

2.5 Create an Upgrade Package

Script path: /home/littro/Model/updpkg_custom_script

Copy the generated model_custom.ir model to the specified path and run the script.

./updpkg_custom_model_vis.sh ./model_custom.ir

Please note that the tank.om model is generated under the administrator account. If you copy the file to the target directory as a regular user, run the following command to grant read/write/execute permissions (replace file_name with the actual file name, e.g., model_custom.ir):

chmod 777 file_name

After running, the .dev upgrade package will be generated automatically.
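If the copy step is scripted, the same permission change can be applied from Python. This is a small sketch using only the standard library; the function name grant_full_access is hypothetical. It has the same effect as chmod 777 on the given file and returns the resulting mode so that it can be checked.

```python
import os
import stat


def grant_full_access(path: str) -> str:
    """Apply mode 777 to path and return the resulting mode as an octal string."""
    os.chmod(path, 0o777)
    # Read the mode back so the caller can confirm the change took effect.
    return oct(stat.S_IMODE(os.stat(path).st_mode))
```

The caller must own the file or have administrator rights, as with the chmod command itself.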

This upgrade package can be used for remote upgrades on Tofu5.

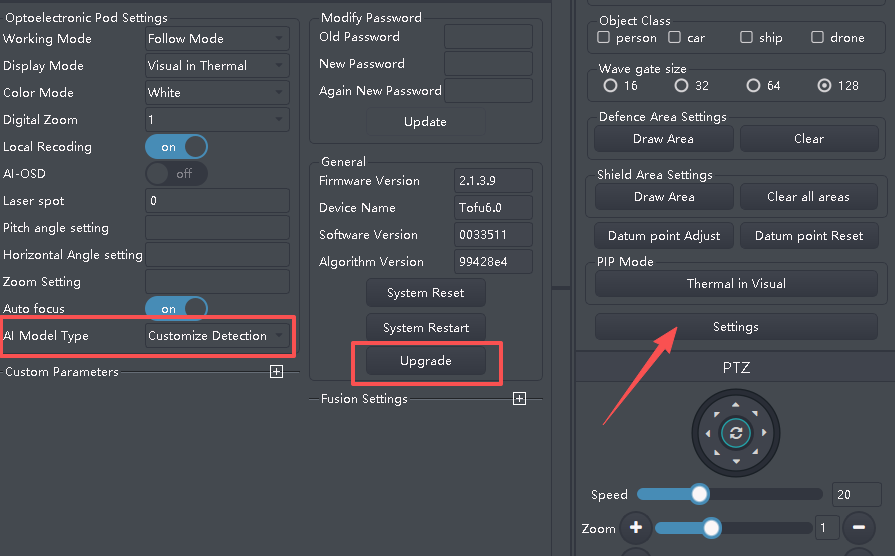

3. Model Upgrades and Testing

For detailed upgrade procedures, please refer to the TVMS software user manual.

After the upgrade is completed, enter the Tofu5 device settings interface, find the AI Model Type option, and change it to Customize Detection.

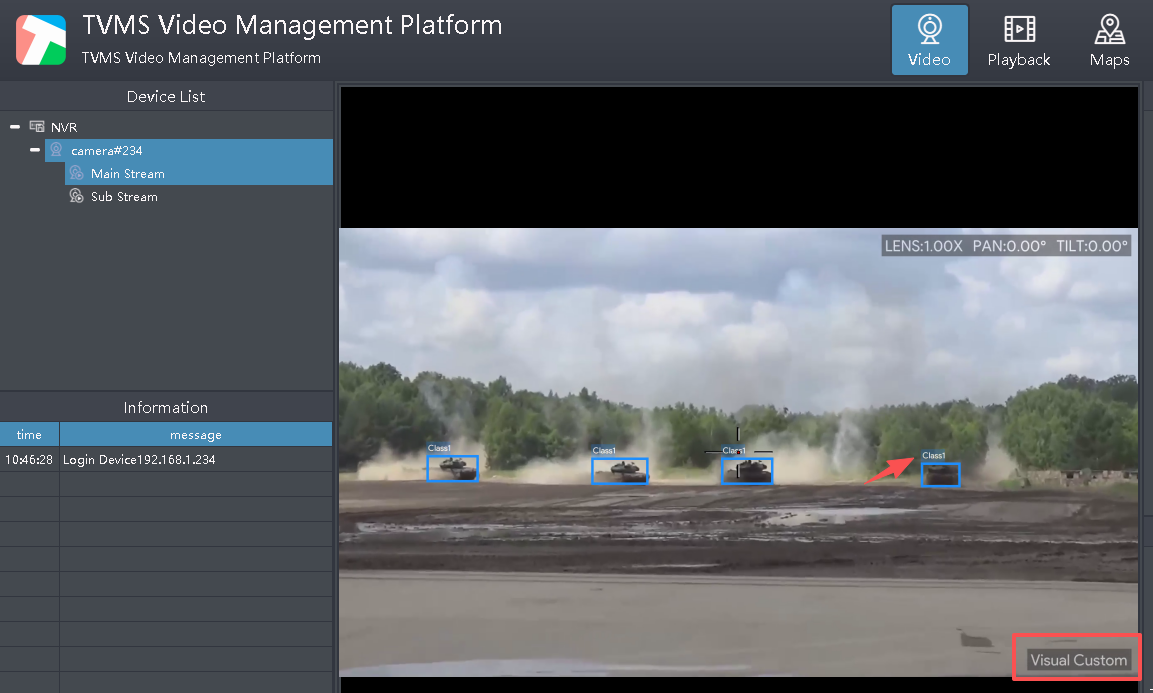

The result of the demo model is shown below; it can detect tanks in the video.
